How Could AI-Generated Content Impact the 2024 Election?

This is not a hypothetical question or a distant possibility: AI-generated content is already influencing voters. Although many state and federal lawmakers are scrambling to safeguard the upcoming election, a growing number of experts are sounding the alarm, warning that the U.S. is woefully unprepared for the threat of AI-generated propaganda and disinformation. In the 14 months since ChatGPT’s debut, generative AI has been flooding the internet with lies, reshaping the political landscape and even challenging our concept of reality.

Here are just a few recent examples of AI in action on the campaign trail:

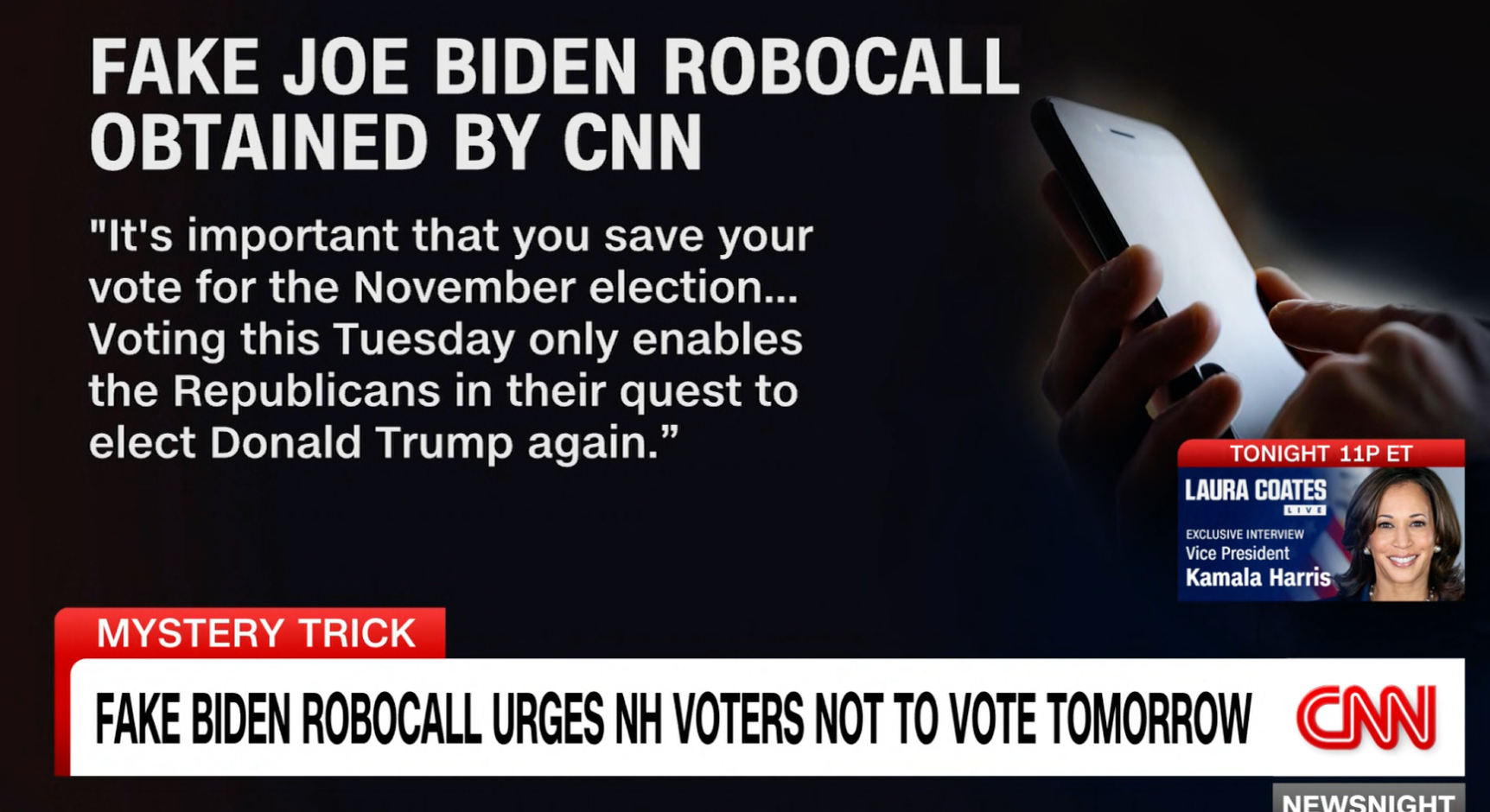

Two days before the primary election in New Hampshire, voters received calls from a fake President Biden telling them not to vote. The voice impersonating the president was almost certainly AI-generated.

Credit: CNN

Florida Gov. Ron DeSantis’s presidential campaign mixed authentic photos with AI-generated images of Donald Trump embracing and even kissing Dr. Anthony Fauci (a deeply loathed figure in the MAGA world) to discredit the former president.

Credit: AFP

The Republican National Committee went even further by creating a 100 percent AI-generated video warning voters of what could happen during President Biden’s second term. The 30-second ad features ominous music and fake apocalyptic images, including the National Guard implementing martial law in San Francisco to crack down on escalating crime.

Credit: RNC

But political campaigns are also using AI for more benign tasks, like writing speeches and fundraising emails. What used to take staffers hours now takes minutes. Other AI tools are helping campaigns target individual voters at a granular level that would have been too time-consuming in the past. By analyzing voter databases and demographic information, AI can identify which issues people care about and what will get them to the polls. AI can also translate campaign materials into many languages to reach non-English speakers, kind of like the universal translator on Star Trek for all you Trekkies out there.

This technology is also revolutionizing traditional outreach strategies. AI-powered robocallers can now connect with potential voters, but unlike the prerecorded robocall in New Hampshire, an AI robocaller can hold actual conversations with people. According to Reuters, an artificial intelligence volunteer known as Ashley called thousands of voters in Pennsylvania on behalf of Shamaine Daniels, a Democrat running for a House seat.

Another bonus is that Ashley is always on time, never flustered by policy questions, and undeterred by rude responses. But her undeniable superpower is that she can hold an unlimited number of one-on-one phone conversations simultaneously.

Credit: Civox

A company called Civox created Ashley, and its CEO, Ilya Mouzykantskii, told Reuters he expects his AI robocallers to make hundreds of thousands of calls for candidates this year. “The future is now,” said the 30-year-old Mouzykantskii. “It’s coming in a very big way.”

It’s also coming way too fast for government regulators, who are struggling to keep pace with artificial intelligence. So far, no federal law prohibits the use of AI-generated content in national elections, though legislators from both parties have introduced at least four bills targeting deepfakes and other deceptive media. The Federal Election Commission is also considering whether it has the authority to ban certain types of AI content used by candidates or their proxies.

The regulatory response is further along at the state level. Minnesota, California, Texas, Washington, and Michigan have laws banning or restricting deepfakes and other AI-created content in political ads. At least 14 other states also have legislation in the works to limit this type of deceptive content when it comes to elections.

But these laws may not be enough to stop the political disinformation already flooding the internet. Chatbots are making it easier and faster to produce false content, giving both domestic and foreign entities the opportunity to exploit this technology for political gain.

The good news is that the public is aware of the threat. According to a Northeastern University poll, 83 percent of respondents are worried about AI-generated misinformation during the 2024 election. Here’s the problem with that: those concerns about AI content pose another risk known as the liar’s dividend. The liar’s dividend emerges when people are exposed to a great deal of fake information that looks realistic. As they become more vigilant about spotting deepfakes and other deceptive content, they also become more likely to dismiss real information and genuine images. It’s a troubling paradox: the more skeptical the public, the easier it is to convince them that what’s actually real is fake.

“Unscrupulous public figures or stakeholders can use this heightened awareness to falsely claim that legitimate audio content or video footage is artificially generated and fake,” wrote Josh Goldstein in his article “Deepfakes, Elections and Shrinking the Liar’s Dividend.”

Blaming AI for embarrassing slip-ups is already happening in the U.S. When the Lincoln Project launched an ad showing former President Donald Trump confused and repeatedly struggling to pronounce the word “anonymous,” Trump accused the group of using artificial intelligence to make him “look as bad and pathetic as Crooked Joe Biden,” even though the gaffes were entirely real and well documented.

Credit: Feeble/YouTube

The stakes for the 2024 election are huge. Many Americans are distrustful of both the news media and the political process, fueled in part by misinformation about the 2020 election. The ability of voters to agree on a common set of facts is already strained, and bad actors now have new tools to further polarize the public and influence the outcome of the election. It’s not hard to imagine how deeply dangerous AI-generated content could be.

“We know that we are facing choices that are very fundamental in this election, perhaps even the future of American democracy,” said Darrell West, the author of “How AI will transform the 2024 elections,” in an interview on WBUR’s program On Point. “My greatest fear is this election gets decided based on disinformation.”

This article was originally published on News Literacy Matters.

Award-winning international business journalist Sissel McCarthy is a Distinguished Lecturer and Director of the Journalism Program at Hunter College and founder of NewsLiteracyMatters.com, an online platform dedicated to teaching people how to find credible information in this digital age. She has been teaching news literacy and multimedia reporting and writing for more than 16 years at Hunter College, NYU, and Emory University, following her career as an anchor and reporter at CNN and CNBC. McCarthy serves on the board of the Association of Foreign Press Correspondents.